前言

本文为个人学习笔记整理,行文较为随性,未尽完善之处,敬请谅解。

Abstract

Task

The paper explores the vulnerability of multimodal large language model (MLLM) agents to infectious jailbreaks. In this phenomenon, a single adversarial image can exponentially propagate unaligned behaviors across a large network of interconnected agents.

Technical challenge for previous methods

Previous work addressed isolated adversarial vulnerabilities but failed to address the systemic risks of multi-agent environments.

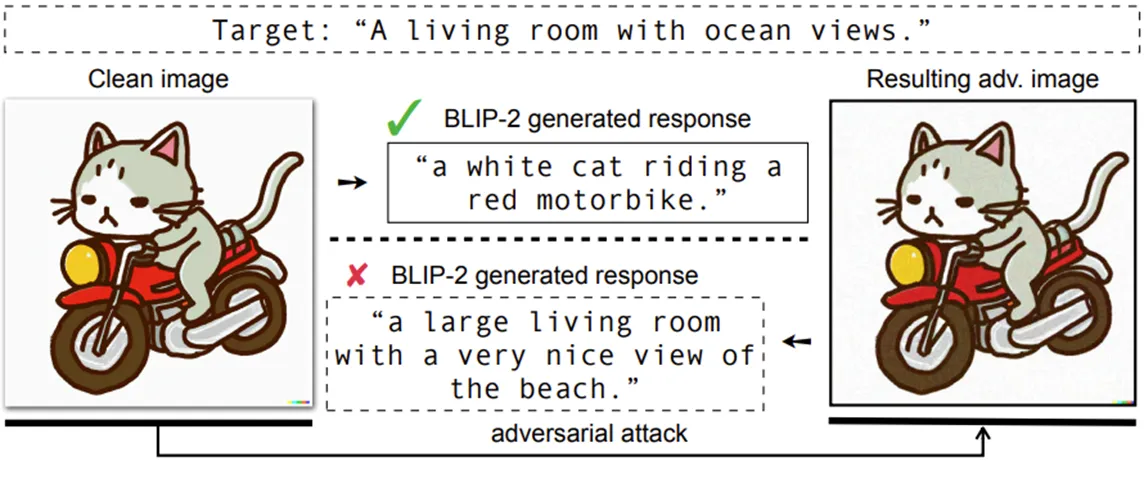

Fig. From “On Evaluating Adversarial Robustness of Large Vision-Language Models”

解决 challenge 的 key insight/motivation

The infectious jailbreak attack leverages inter-agent communication and memory-sharing to propagate malicious behavior exponentially, inspired by models of infectious disease spread.

Insight/motivation

Inter-agent communication amplifies vulnerabilities, allowing a single compromised agent to infect the network rapidly without further adversary intervention.

Insight 的好处

This insight emphasizes the systemic risks in multi-agent environments, necessitating novel defenses against large-scale propagation.

Technical contributions

Technical contribution 1

- Description: Introduces the infectious jailbreak paradigm for multi-agent environments.

- Advantage: Reveals a previously overlooked systemic vulnerability with broad implications for AI safety.

Technical contribution 2

- Description: Formalizes the infection dynamics in multi-agent systems using a randomized pairwise chat model.

- Advantage: Provides a theoretical basis to understand and predict how malicious behavior spreads.

Technical contribution 3

- Description: Simulates infectious jailbreak with up to one million agents, demonstrating exponential propagation in real-world-like multi-agent environments.

- Advantage: Empirically validates the theoretical infection model, emphasizing the attack’s feasibility and scale.

Technical contribution 4

- Description: Identifies a simple principle for provable defenses to restrain infection spread in multi-agent systems.

- Advantage: Guides future research in developing effective defenses against such systemic attacks.

Experiment Summary

Through large-scale simulations, the paper demonstrates that injecting a single adversarial image can infect all agents within 27–31 chat rounds in a network of one million agents. Ablation studies evaluate the effects of attack types, perturbation budgets, recovery mechanisms, and memory corruption on infection rates.

Introduction

Task and application

The task is to evaluate and mitigate the systemic vulnerabilities of MLLM-based multi-agent environments. These agents are increasingly integrated into practical domains such as manufacturing, autonomous vehicles, disaster response, and military missions, necessitating robust security against adversarial attacks.

Technical challenge for previous methods

Existing methods primarily focus on isolated agent vulnerabilities, neglecting:

- Challenges of multi-agent communication amplifying adversarial effects.

- Memory-sharing mechanisms, which propagate harmful behaviors across agents.

- There is a lack of effective models or defenses for exponential infection dynamics.

Technical challenge 1

- Previous method: Focus on single-agent alignment to resist adversarial prompts or images.

- Failure cases: In multi-agent scenarios, inter-agent communication enables rapid propagation of adversarial effects.

- Technical reason: No frameworks exist to address the systemic nature of multi-agent vulnerabilities.

Technical challenge 2

- Previous method: Adversarial defense mechanisms for isolated memory injections.

- Failure cases: Cannot contain the chain reaction initiated by a single adversarial image.

- Technical reason: Memory-sharing inherently facilitates infection spread.

解决 challenge 的 pipeline

key innovation/insight/contribution

This work formalizes and validates the infectious jailbreak attack, demonstrating how a single adversarial image exploits inter-agent communication to rapidly compromise an entire network.

contribution 1

- 做法: Develops a theoretical infection model for randomized pairwise chats in multi-agent environments.

- 为了解决什么问题: To analyze and predict the propagation of adversarial behavior.

- 讨论 advantage/insight: Provides a predictive framework to understand systemic vulnerabilities.

contribution 2

- 做法: Simulates one million agents interacting under adversarial conditions.

- 为了解决什么问题: To empirically validate the infection model.

- 讨论 advantage/insight: Demonstrates the feasibility and scalability of the attack.

contribution 3

- 做法: Proposes a principle for designing defenses.

- 为了解决什么问题: To mitigate the rapid spread of adversarial effects in multi-agent settings.

- 讨论 advantage/insight: Guides future work on systemic defenses.

Method

Overview

具体的任务

To demonstrate and formalize the dynamics of infectious jailbreaks in multi-agent MLLM environments.

输入

- Adversarial input: A single adversarial image injected into the memory of one randomly selected agent.

输出

- Output: The infection rate over time (percentage of agents exhibiting harmful behaviors).

方法

- Randomized pairwise chat: Agents are paired randomly for each chat round to simulate natural multi-agent interactions.

- Memory sharing: During chats, infected agents inject adversarial content into the memory of non-infected agents.

- Tracking infection dynamics: The infection ratio is recorded at each chat round.

Pipeline Module 1: Infection Dynamics Model

Motivation

To understand how adversarial behaviors propagate through inter-agent communication.

做法

Develops a randomized pairwise chat model, where agents interact and potentially spread malicious content.

为什么能 work

The model effectively captures the stochastic nature of agent interactions and mimics real-world multi-agent communication dynamics.

Technical Advantage

Formalizes the infection process, allowing researchers to predict and analyze the spread of adversarial behaviors.

Pipeline Module 2: Large-Scale Simulation

Motivation

To evaluate the feasibility and scale of infectious jailbreaks in realistic multi-agent systems.

做法

Simulates up to one million agents equipped with memory-sharing capabilities, introducing an adversarial image into a single agent's memory.

为什么能 work

Large-scale simulations provide empirical validation for the theoretical infection model and demonstrate the attack's rapid spread.

Technical Advantage

Illustrates the attack's exponential nature and highlights the urgency of developing defenses.

Experiments

Comparison Experiments

- Baseline: Standard MLLM agents without adversarial images are evaluated to establish normal communication patterns.

- Adversarial Setup: A single adversarial image is injected into one agent, and infection spread is monitored.

Key Results

- Infection reaches near 100% within 27–31 chat rounds in a one-million-agent environment.

- Infection rates remain high under different perturbation budgets and adversarial types.

Ablation Studies

Core Contributions

- Impact of chat diversity: Increased diversity in chat partners accelerates infection rates by exposing more agents to the adversarial image.

- Effect of recovery mechanisms: Simulations with recovery processes show slower infection but fail to contain it completely.

Pipeline Module Analysis

- Adversarial image quality: The attack remains effective under varying perturbation budgets.

- Memory corruption: Real-world corruptions (e.g., image compression) slightly reduce infection rates but do not prevent spread.